AI-powered chatbots have quickly become a key part of customer service. They’re often the first point of contact and the “face” of many organizations. Companies love them because they handle large volumes of questions, provide personalized recommendations, and scale services efficiently.

But behind these efficiencies lies a growing concern: chatbots collect and process large volumes of data – sometimes more than users realize or consent to. If privacy, security, and governance aren’t taken seriously, both users and organizations face significant risk.

As chatbots become a daily tool for many companies, regulators are stepping in to make sure these technologies don’t outpace privacy and security protections.

Regulatory landscape in Canada: Bill C-27, CPPA & AIDA

In Canada, federal reform efforts had been gaining momentum to modernize how AI and sensitive personal data are regulated. The most notable was Bill C-27, which proposed the Consumer Privacy Protection Act (CPPA) and the Artificial Intelligence and Data Act (AIDA).

These proposals aimed to require organizations to identify and manage risks associated with AI systems (especially those used in sensitive areas like customer service or automated decision-making) and to strengthen rules around consent, transparency, and data protection. However, these reforms have not become law in their original form.

Provincial progress: Quebec’s Law 25 and movement across other provinces

While organizations wait for federal reform, they still need to operate under existing laws and keep an eye on provincial and international standards.

Quebec’s Law 25: Privacy and automated decision-making requirements (private sector)

Under Quebec’s Law 25, private-sector organizations using personal information for fully automated decisions must:

- Notify affected individuals when a decision about them is based exclusively on automated processing. If a human is involved in making the decision, this obligation may not apply.

- Provide access to the information used to make automated decisions, including the main factors, rules, or parameters that influenced the outcome.

- Allow individuals to have their personal information corrected if it is inaccurate.

- Enable individuals to submit observations or request a review by an authorized person within the organization.

- Use personal information only for the original purpose for which it was collected.

- Destroy or anonymize personal information once its purpose is fulfilled, unless retention is required by another law.

- Implement appropriate security and confidentiality measures based on the sensitivity, quantity, and medium of the personal information processed.

Movement in other provinces

Several provincial privacy regulators, including those in British Columbia and Alberta, have issued joint principles for the responsible, privacy-protective development and use of generative AI.

In Ontario, the government’s Responsible Use of Artificial Intelligence Directive (effective December 1, 2024) obliges ministries and agencies to apply risk-management and transparency practices before deploying AI tools.

While Quebec remains the only province with legislation that specifically governs automated decision-making by private-sector organizations, these developments show growing regulatory and oversight attention to AI and data protection across Canada.

International standards: GDPR and the EU AI Act

In the European Union, the General Data Protection Regulation (GDPR) remains a gold standard for privacy. It emphasizes lawful data use, valid consent, limited data collection, and data protection, all of which come into play when chatbots handle personal information, profile users, or operate internationally.

The EU AI Act adds further obligations: risk management, human oversight, record-keeping, and monitoring for high-risk AI systems, along with transparency obligations that require chatbots to make clear to users that they are interacting with an AI system.

Together, GDPR and the AI Act set the trajectory for how global regulators expect AI-driven customer service systems to operate.

Whether in the EU, where these rules are already in force, or in Canada, where reform is still taking shape, the direction is clear:

- AI and automation need to be transparent, accountable, and properly risk-assessed

- Data collected by chatbots should be minimized, secured, and carefully managed

- Users need to know how their data is used, and have real control over it

- Users must give informed consent (explicit consent where the GDPR or Quebec’s Law 25 requires it) for how their data is collected and used, including any secondary use

- Data should be used only for clear, specific purposes (purpose limitation)

- Data should not be kept longer than necessary; retention policies and deletion/disposal practices matter
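The minimization and purpose-limitation principles can be enforced at the point where a chatbot stores conversation data. The sketch below is a minimal Python illustration, assuming each conversation is captured as a simple dictionary and that every declared purpose maps to an allow-list of the fields it genuinely needs. The purpose names, field names, and the `PURPOSE_FIELDS` and `minimize_record` names are hypothetical, not drawn from any regulation or product.

```python
from typing import Any

# Hypothetical mapping of declared purposes to the only fields each purpose needs.
PURPOSE_FIELDS: dict[str, frozenset[str]] = {
    "order_support": frozenset({"order_id", "message"}),
    "appointment_booking": frozenset({"name", "preferred_date", "message"}),
}


def minimize_record(record: dict[str, Any], purpose: str) -> dict[str, Any]:
    """Keep only the fields needed for a declared purpose; reject undeclared purposes."""
    if purpose not in PURPOSE_FIELDS:
        # Purpose limitation: no declared purpose, no processing.
        raise ValueError(f"Undeclared purpose: {purpose!r}")
    allowed = PURPOSE_FIELDS[purpose]
    # Data minimization: drop every field the declared purpose does not require.
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "order_id": "A-1001",
    "message": "Where is my parcel?",
    "email": "user@example.com",
    "date_of_birth": "1990-01-01",
}
print(minimize_record(raw, "order_support"))
# {'order_id': 'A-1001', 'message': 'Where is my parcel?'}
```

Rejecting undeclared purposes, rather than silently passing data through, keeps any new or secondary use a deliberate decision that can be checked against the consent users actually gave.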

Emerging privacy and security risks in chatbots

As AI chatbots evolve, their new capabilities bring a range of privacy and data-security challenges, and the privacy risks in particular are becoming increasingly significant.

Each chatbot is essentially a new entry point for data, and potentially for malicious actors. Because they interact directly with users, chatbots may collect more data than necessary or more than they are authorized to gather.

If it is not properly secured, a chatbot could unintentionally expose an organization’s sensitive information. It could also reveal internal system details or configurations that give attackers a pathway to exploit. Together, these risks increase the likelihood of cyber attacks, data leakage, and reputational harm.


Key questions organizations must ask themselves

- What data is collected and for what purpose?

- What data is available to the chatbot?

- What data is the model trained on?

- Who has access to the data collected/processed by the chatbot?

- Is this data stored in compliance with internal policies and relevant regulations (and, in Canada, with an eye on future reform)?

- What safeguards are in place to prevent unauthorized access?

- What is the response plan if a breach occurs?

Unfortunately, these considerations are often overlooked in the rush to deploy new technologies. Addressing them requires more than simply identifying and remediating gaps; it necessitates a proactive and forward-looking approach to risk.

Leading organizations integrate privacy and security into their chatbot programs from the outset, rather than treating them as corrective measures after an issue has occurred.

Privacy and security by design: Building trust from day one

Designing with privacy and security in mind means anticipating risks before they appear. It means building safeguards into how the technology works and giving users confidence that their information is handled responsibly and ethically. It’s not just about compliance with legislation; it’s about building trust and resilience into every interaction.

How each organization brings this to life looks a little different, and that’s where the real opportunity lies. The right design choices can strengthen governance, simplify compliance, and even enhance the user experience.

Preparing for autonomous AI agents: Why governance matters more than ever

In addition to privacy and security concerns, chatbots introduce new complexities in data governance. With vast amounts of data generated in real time, organizations need clear strategies for managing, storing, and ultimately disposing of that information.

As AI evolves, autonomous agents raise the stakes even further. Unlike chatbots, agents can act on data, trigger processes, and learn continuously. This expanded capability means governance must address not only data volume but also decision-making transparency, lifecycle management, and safeguards for actions taken by these systems.

Here are a few ways you can prepare:

- Define and disclose purpose: Clearly state why data is collected and enforce strict controls on how it’s used to maintain transparency.

- Implement retention policies: Well-defined schedules prevent holding sensitive data longer than necessary, reducing breach and compliance risks.

- Apply robust safeguards: Secure data-management practices protect information throughout its lifecycle and ensure it is kept only as long as it serves its intended purpose.

- Strengthen governance for complexity: As chatbot datasets grow, structured processes for securing, maintaining, and understanding data are critical for privacy and compliance.
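A retention schedule only reduces risk if it is actually applied. As a minimal Python sketch, assume each stored chatbot record carries the purpose it was collected for, a collection timestamp, and a flag marking data that another law requires you to keep. The retention periods, field names, and the `ChatRecord` and `partition_expired` names are hypothetical; real periods must come from your own policies and applicable law.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per purpose; real values come from policy and law.
RETENTION: dict[str, timedelta] = {
    "order_support": timedelta(days=90),
    "appointment_booking": timedelta(days=30),
}


@dataclass
class ChatRecord:
    record_id: str
    purpose: str
    collected_at: datetime
    legal_hold: bool = False  # True when another law requires the data to be retained


def partition_expired(
    records: list[ChatRecord], now: datetime
) -> tuple[list[ChatRecord], list[ChatRecord]]:
    """Split records into (keep, dispose) according to the retention schedule."""
    keep: list[ChatRecord] = []
    dispose: list[ChatRecord] = []
    for record in records:
        period = RETENTION.get(record.purpose)
        if record.legal_hold:
            # Retention required by another law overrides the schedule.
            keep.append(record)
        elif period is None or now - record.collected_at > period:
            # No declared retention period, or the period has elapsed.
            dispose.append(record)
        else:
            keep.append(record)
    return keep, dispose


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
records = [
    ChatRecord("r1", "order_support", now - timedelta(days=10)),
    ChatRecord("r2", "order_support", now - timedelta(days=120)),
    ChatRecord("r3", "appointment_booking", now - timedelta(days=45), legal_hold=True),
]
keep, dispose = partition_expired(records, now)
print([r.record_id for r in keep])     # ['r1', 'r3']
print([r.record_id for r in dispose])  # ['r2']
```

Records with no declared retention period are routed to disposal rather than kept by default, so gaps in the schedule surface instead of quietly accumulating data. Whether disposal means deletion or anonymization is left to the calling code.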

The call for proactive privacy and data security management

These challenges aren’t roadblocks; they’re opportunities to strengthen your organization’s data governance. By tackling the privacy, security, and data issues related to AI chatbots now, businesses can better protect their customers and safeguard their reputation over time.

Taking a proactive approach to AI privacy and security is essential. This means:

- Establishing governance, clear policies, and risk-assessment methodologies

- Maintaining transparency around consent

- Implementing the right technological protections

- Testing and monitoring the models to ensure they work as designed

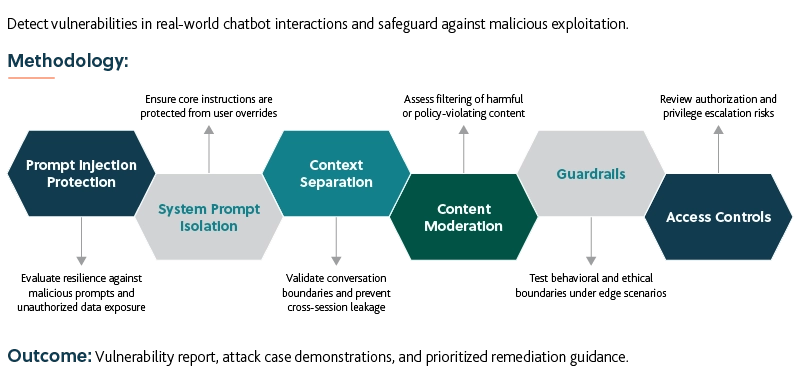

The methodology diagram illustrates a structured approach to detecting real-world risks in chatbot interactions and implementing safeguards against malicious exploitation, supporting the goal of keeping models secure and reliable.

Given the rapid evolution of AI and the complexity of associated risks, organizations often need expert guidance to ensure privacy, security, and compliance are maintained.

How we can help: Guiding organizations through AI privacy and security

We specialize in guiding organizations through the complex intersection of AI technology, data privacy, and security. Our experienced advisors can help assess your chatbot implementation, provide insights into data-governance best practices, and ensure you’re aligned with current regulations while also preparing for future reform.

While there’s no one-size-fits-all solution, it’s crucial to start the conversation now, before the risks catch up with your business. Reach out to our advisors today.
