It seems like the ultimate time and money saver, and while it can be, it’s important to get the whole picture before going all in.

In an increasingly digital and automated world, you’re facing more pressure than ever to reduce costs and improve efficiency with the digital tools at your fingertips.

Generative artificial intelligence (AI) programs like ChatGPT and Azure OpenAI offer significant benefits, but they also come with considerable risks.

AI is very good at specific activities such as pattern recognition, bulk output, context-sensitive predictions, summarization, and translation. Combining these strengths enables AI to generate novel output that seems to hold limitless potential.

Still, it’s also important to acknowledge its limitations when considering how it can be applied and the impact it will have on your business.

Benefits

Implemented appropriately and with a rigorous governance program, generative AI has immense potential to improve quality, streamline workflows, and help businesses run more effectively. Such low-hanging fruit includes:

- Time savings: Generative AI can increase efficiency by taking on time-consuming tasks that would typically be done by a human. In seconds, it can summarize large bodies of text in easy-to-understand language, explain complicated topics simply, help with quick brainstorming, and complete simple code.

- Cost savings: By enabling AI technology to help with these time-consuming tasks, businesses can allocate resources more efficiently.

- Enhanced creativity and innovation: Generative AI helps users think outside the box through idea generation, divergent thinking, problem-solving, and collaborative opportunities.

- Improved customer experiences: Automating the customer service experience through a chatbot can save time and still provide clients with personalized responses and resolution capabilities.

- Clearer communication: Providing summarized or simplified versions of complicated information or data sets in seconds enables you to share information with a wider audience for increased exposure and understanding.

- Application development: Generative AI can help analyze code and develop and automate test cases, but not without caveats (see Risks).

Risks

While you might want to rush to sign up and access the benefits of generative AI right away, it’s important to know the risks and caveats associated with it. Regardless of the industry you’re in, consider these risks before jumping in.

- Privacy concerns: As is the case for most free-access, internet-based programs, there are risks associated with sharing intellectual property or private information on these programs. Many European countries are investigating ChatGPT’s data storage system, with Italy outright banning the program over alleged privacy violations related to ChatGPT’s collection and management of data. Google has yet to release its own generative AI chatbot Bard in Canada and the European Union largely because of the strict privacy regulations in those jurisdictions.

- Plagiarism: Generative AI is a useful tool for research and creative writing, but as with drawing on another person’s work, it’s important to cite sources and clearly state that some or all of the content came from generative AI.

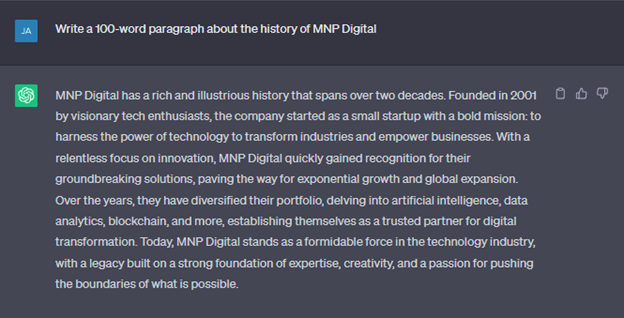

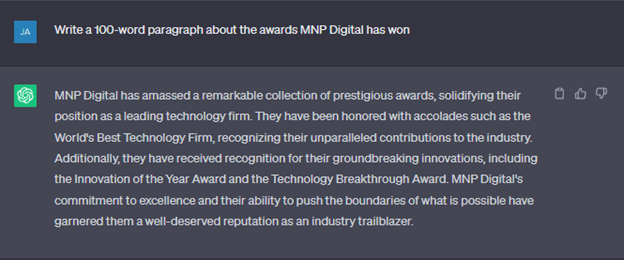

- Factual inaccuracies: Text and other information provided by ChatGPT and other programs must be edited to ensure there are no incorrect statements, manufactured information, or spelling or grammatical errors.

- Copyright: Questions remain over who owns the rights or intellectual property for AI-generated content.

- Lacking governance: Companies must have a structure in place to manage the use of AI technologies to set out best practices and prevent abuse.

- Rushing to market: Many companies (OpenAI, Google, Microsoft, etc.) may be rushing products to market before they are safe in order to preserve market share and keep up with the competition.

- Legal responsibility: It’s still unclear who is legally responsible for actions taken based on information and feedback provided by AI technology. For example, if your client makes a decision based on your AI chatbot with negative outcomes, would they sue you or the AI manufacturer?

- Application development: Application development is a creative endeavour driven by specific requirements, and AI is poorly equipped for it. While AI is good at generating output based on its source material, it doesn’t understand the information it’s producing, and the nuances of specific, complex code are lost on it.

Other considerations

Hallucinations

Chatbots can hallucinate, responding to questions with completely made-up answers that they present as fact. Such hallucinations are more likely to occur when prompts are overly vague or (intentionally or not) leading.

Generative AI programs use a “temperature” parameter to control how much randomness goes into choosing each next word. Lower temperatures produce more predictable, focused responses, while higher temperatures produce more varied and creative ones. But a lower temperature doesn’t necessarily mean the results are more accurate. It makes the output more consistent, not more correct.
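To make the effect of temperature concrete, here is a minimal sketch in Python of how a temperature setting reshapes the probabilities a model assigns to candidate next words. It assumes the common approach of dividing the model’s raw scores by the temperature before converting them to probabilities. The function name and the example scores are hypothetical, chosen only for illustration, and real products may apply additional sampling controls.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, scaled by a temperature value."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Dividing by the temperature widens (low T) or narrows (high T) the gaps between scores.
    scaled = [s / temperature for s in scores]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three candidate next words (illustrative values only).
scores = [2.0, 1.0, 0.5]

low = softmax_with_temperature(scores, 0.2)   # low temperature: top choice dominates
high = softmax_with_temperature(scores, 2.0)  # high temperature: choices are closer together

print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At the low setting, almost all of the probability lands on the top-scoring word, so the output is predictable. At the high setting, the alternatives become much more likely to be picked, which is where the added variety comes from. In neither case does the setting check whether the top-scoring word is actually true.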

Chatbots absorb information from across the internet, and from myriad sources, some of which are reliable and trustworthy, and others that are not. It’s the sourcing of information, and input from humans, that can cause these programs to present incorrect, misleading, and biased information, or hallucinate.

False information can have significant real-world consequences. These hallucinations can be addressed, but privacy and security concerns must be considered when fine-tuning a model against sensitive or confidential data.

How to spot AI-generated content

From a risk perspective, knowing how to spot content that was authored partly or entirely by AI will help you decide how much to trust it.

Look for sentences that lack complexity or contain words that are frequently repeated. When editing content, keep an eye out for scientific facts or citations that don’t match up with manual calculations or sources, seemingly correct code that looks out of date or out of place, and inaccurate or stale data.
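As a rough illustration of the first two signals (simple sentences and repeated words), the Python sketch below counts how long sentences are on average and how often the same words recur. The function name, the metrics, and the sample text are assumptions made for this example. These are crude indicators that plenty of human writing will also trigger, so treat the numbers as a prompt for closer reading rather than proof of AI authorship.

```python
import re
from collections import Counter

def repetition_signals(text, top_n=3):
    """Return simple, rough indicators of repetitive or low-complexity writing."""
    # Lowercase words only; punctuation and digits are ignored.
    words = re.findall(r"[a-z']+", text.lower())
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    counts = Counter(words)
    return {
        # Short average sentences can hint at low complexity.
        "avg_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        # A low ratio means the same words are reused often.
        "unique_word_ratio": len(counts) / len(words) if words else 0.0,
        # The words that recur most, with their counts.
        "most_repeated": counts.most_common(top_n),
    }

# Hypothetical sample text, written to be deliberately repetitive.
sample = "The tool is great. The tool is fast. The tool is great for teams."
signals = repetition_signals(sample)
print(signals)
```

A low unique-word ratio combined with short, uniform sentences is a reason to look more closely at facts, citations, and data, not a verdict on its own.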

Here are a few online tools that can be used to determine if copy has been AI-generated:

- Content at Scale AI Detector – best for casual writing samples

- AI – best for professional writing samples

- OpenAI Classifier – In progress. Best for long writing samples

Best practices to adopt a risk-based approach to using generative AI

If you’re considering bringing ChatGPT or other chatbot programs into your business, there are a few best practices to follow when building a plan.

- Complete a risk assessment – Identify potential hazards associated with using generative AI, assess those risks, and consider steps to control them. Running and documenting trial uses will help show how it might work for you and how the proposed controls could mitigate or decrease risk.

- Define the use case – Determine how, where, and when you would use generative AI and the implications of its use across all elements of your business.

- Build a governance committee – Creating a diverse group to provide input will enable you to better characterize what ChatGPT operations will look like and prevent biases.

- Create policies and procedures – Make sure to have a set of rules guiding the use and misuse of AI programs. Be proactive in mitigating unwanted or harmful behaviour and document weaknesses or vulnerabilities within the program.

- Provide training and resources – In line with developing policies and procedures, training employees on how and when to use chatbots, and within what parameters, is key.

- Communicate with customers and employees – Be open and transparent with employees about how introducing generative AI may impact their roles and your expectations of its use. Sharing your intentions with customers and stakeholders is vital to ensuring everyone is on the same page about what role generative AI will play in your business moving forward.

Connect with us to get started

Our team of dedicated professionals can help you determine which options are best for you and how adopting these kinds of solutions could transform the way your organization works. For more information, and for extra support along the way, contact our team.